Acknowledgement

These notes are based on the slides provided by the professor, Qiang Ye, for the

class "CSCI-3120 Operating Systems". I am taking/took this class in Fall

2023-2024 semester. If there are corrections or issues with the notes, please

use the contact page to let me know. A lot of the words and images are either

directly taken from the slides or have been paraphrased slightly.

CH1: Introduction

Definitions of "Operating System":

- Everything a vendor ships when you order an operating system.

- The one program running at all times on the computer.

- Actually, it is the kernel part of the operating system.

- Everything else is either system program or application program.

Computer Architecture

Computer Architecture: Logical aspects of system implementation as seen by

the programmer. Answers the question: "How to design a computer?".

Computer Organization: Deals with all physical aspects of computer systems.

Answers the question: "How to implement the design?".

Multiprocessor Systems

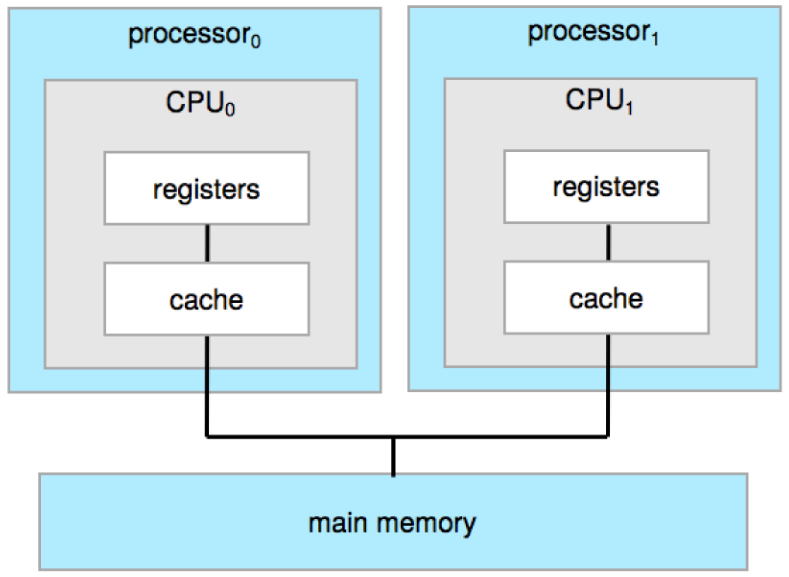

Symmetric Multiprocessing (SMP)

Each CPU processor performs all kinds of tasks, including operating-system

functions and user processes.

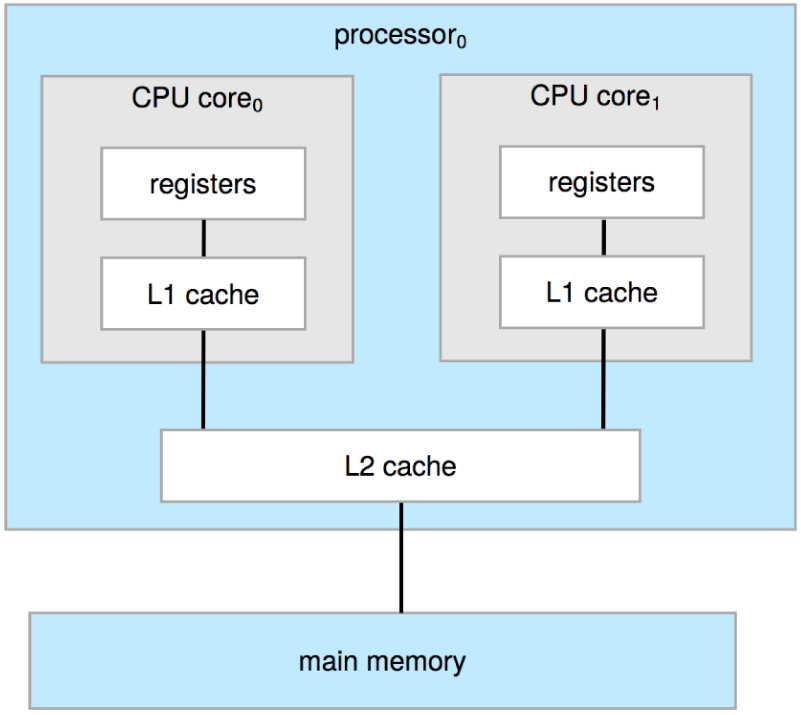

Multicore Systems

Multicore systems are systems in which multiple computing cores reside on a

single chip. Multicore systems can be more efficient than systems with multiple

processors because on-chip communication between cores is faster than

between-chip communication.

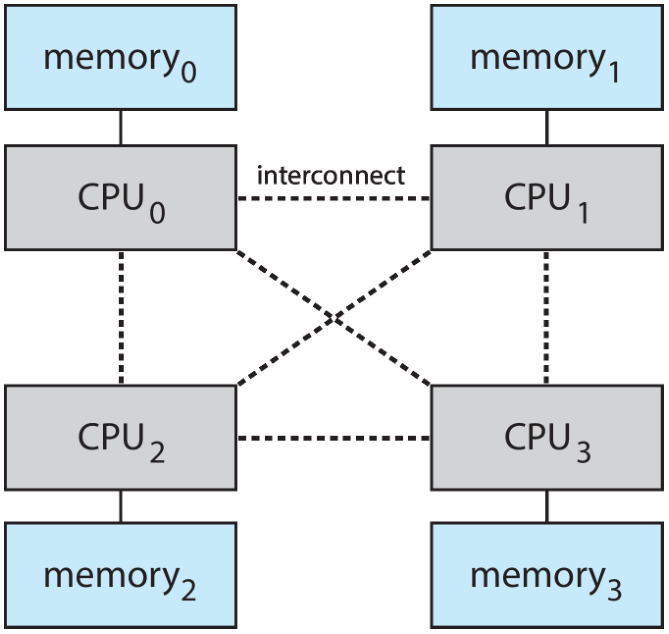

An approach to share memory is to provide each CPU (or group of CPUs) with their

own local memory that is accessed via a local bus.

Clustered Systems

Clustered systems differ from the multiprocessor systems in that they are

composed of two or more individual systems. Each individual system is typically

a multicore computer. Such systems are considered loosely coupled.

Computer Organization

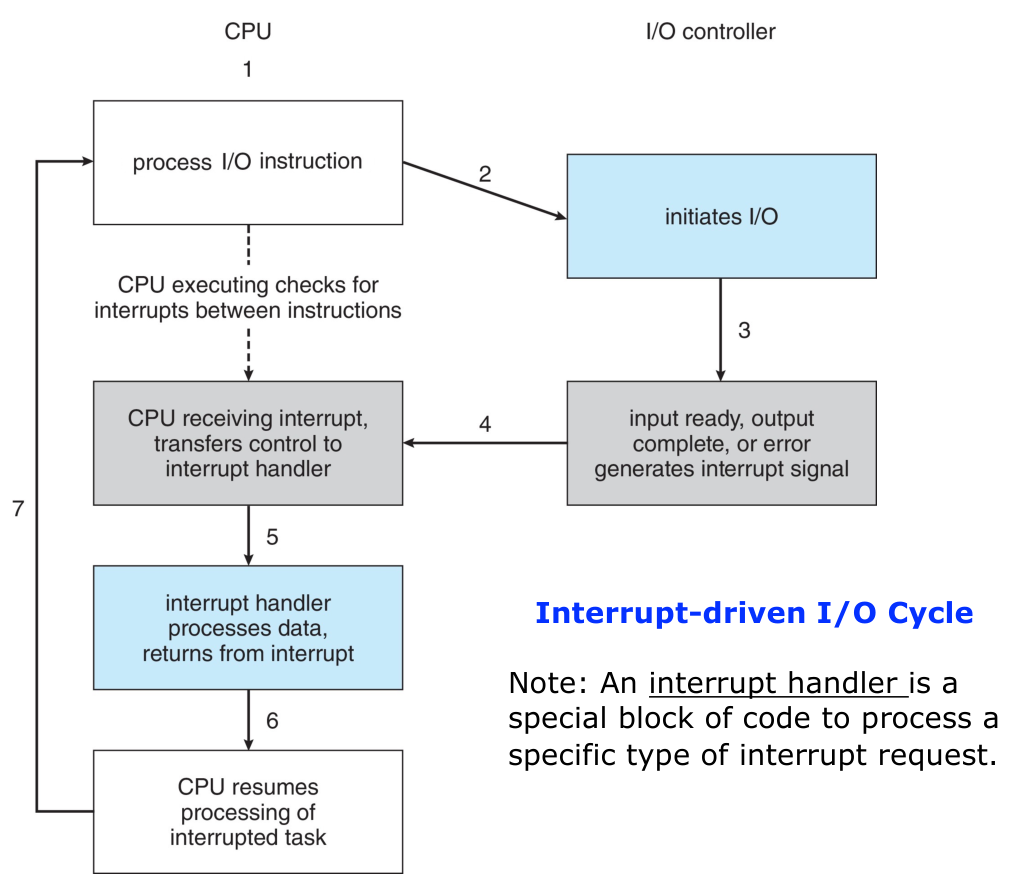

Interrupts

An interrupt is a signal to the processor emitted by hardware or software

indicating an event that needs immediate attention.

Two types of interrupts:

- Hardware Interrupts: defined at the hardware level.

- Software Interrupts: also known as trap or exception defined at the OS

level.

Operating System Operations

Bootstrap Program

When a computer starts, it needs to run an initial program to find the operating

system which then needs to be loaded. This initial program is known as

bootstrap program, tends to be simple. It initializes all aspects of the

system, from CPU registers to device controllers to memory contents. In

addition, it loads the operating-system kernel into memory. Thereafter, the OS

will take over the system.

- Power On: User turns on the computer.

- BIOS/UEFI Initialization: Basic Input/Output System (BIOS) or Unified

Extensible Firmware Interface (UEFI) starts.

- POST: Hardware check via Power-On self-test.

- Bootstrap Program Loaded: Small program that starts the boot sequence.

- Bootloader Activated: Loads and stars the operating system's kernel.

- OS Kernel Loaded: Core of the operating system is loaded into memory.

- OS Initialization: Operating system is initialized and becomes fully

operational.

Multiprogramming

The operating systems keeps several processes in memory simultaneously. The

operating system picks and begins to execute one of these processes at a time.

Eventually, the process may have to wait for some task such as I/O operation to

complete. When this event occurs, the operating system simply switches to and

executes another process.

Multitasking

Multiprogramming doesn't give any guarantee that a program will run in a timely

manner. The very first program may run for hours without needing to wait.

Multitasking is logical extension of multiprogramming where the CPU executes

multiple processes by switching among them, but the switches occur more

frequently, providing the user with a fast response time.

Multimode Operation

The operating system is responsible to ensure that hardware and software

resources of the computer system are protected from incorrect or malicious

programs including the operating system itself.

In order to ensure the proper execution of system, we must be able to

distinguish the execution of operating-system code and user-defined code. The

approach taken by most computer systems is to provide hardware support that

allows differentiation of various modes of execution.

At the least, a system must have a kernel mode (supervisor mode, privileged

mode, system mode) and a user mode. A bit, called the mode bit is added

to the hardware of the computer to indicate the current mode.

If an attempt is made to execute a privileged instruction in user mode, the

hardware does not execute the instruction. Instead, it treats it as an illegal

instruction.

-

Intel processors have 4 separate protection rings, where ring 0 is

kernel mode and ring 3 is user mode. The other modes are for things such as

hypervisors.

-

ARM v8 systems have 7 modes.

-

CPUs that support virtualisation frequently have a separate mode to

indicate when the virtual machine manager (VMM) is in control of the system.

In this mode, the VMM has more privileges than user processes but fewer than

the kernel.

History of Free Operating Systems

In the early days of modern computing (1950s), software generally came with

source code. However, companies sought to limit the use of their software to

paying customers. To counter the move to limit software use and redistribution,

in 1984, Richard Stallman started developing a free, UNIX-compatible operating

system called GNU, which is a recursive acronym for "GNU's Not Unix".

Four Essential Freedoms

The Free Software Foundation (FSF) was founded to support the free-software

movement. This was a social movement with the goal of guaranteeing four

essential freedoms for software users.

- The freedom to run the program.

- The freedom to study and change the source code.

- The freedom to redistribute copies.

- The freedom to distribute copies of the modified versions.

CH2: Operating System Structures

Services

Operating systems provide a collection of services to programs and users. These

are functions that are helpful to the user.

These are some areas where functions are provided:

- User Interface

- Program execution

- I/O operations

- File-system manipulation

- Communications (IPC)

- Error detection.

Another set of OS functions exist to ensure the efficient operation of the

system itself.

- Resource allocation: CPU, Memory, file storage etc.

- Accounting: Logging, tracking.

- Protection and Security: Owners managed and maintained.

User Interfaces

Command-Line Interface (CLI)

The command-line interface (a.k.a. command interpreter) allows direct command

entry. The CLI fetches a command from the user and executes it.

There are two types of commands:

- Built-in commands: These are a part of the CLI program.

- External commands: These commands correspond to independent programs.

You can check if a command is a built-in or an external using the type

built-in command.

$ type type

type is a shell builtin

$ type cd

cd is a shell builtin

$ type cat

cat is /usr/bin/cat

System Calls

System calls provide an interface to services made available by an operating

system. System calls are generally available as functions written in C and C++.

Certain low-level tasks may have to be written using assembly-language

instructions.

However, most programmers do not use system calls directly. Typically,

application developers design programs according to a high-level API. The API

specifies a set of functions that are available to an application programmer.

Example: read and write functions in C (using the stdio library) are

actually wrappers around the corresponding read and write system calls.

Types of System Calls

- Process control

- create/terminate process

- load/execute process

- get/set process attributes

- wait/signal events

- allocate and free memory.

- File management

- create/delete files

- open/close files

- read/write/reposition file

- get/set file attributes.

- Device management

- request/release device

- read, write, reposition

- get/set device attributes

- logically attach or detach devices.

- Information maintenance

- get/set time, date

- get/set system data.

- Communications

- create/delete communication connection

- send/receive messages

- transfer status information

- attach/detach remote devices.

- Protection

- get/set file permissions

- allow/deny user access.

The following table illustrates various equivalent system calls for Windows and

UNIX operating systems.

| Category |

Windows |

Unix |

| Process control |

CreateProcess() |

fork() |

| ExitProcess() |

exit() |

| WaitForSingleObject() |

wait() |

| File management |

CreateFile() |

open() |

| ReadFile() |

read() |

| WriteFile() |

write() |

| CloseHandle() |

close() |

| Device management |

SetConsoleMode() |

ioctl() |

| ReadConsole() |

read() |

| WriteConsole() |

write() |

| Information maintenance |

GetCurrentProcessID() |

getpid() |

| SetTimer() |

alarm() |

| Sleep() |

sleep() |

| Communications |

CreatePipe() |

pipe() |

| CreateFileMapping() |

shm_open() |

| MapViewOfFile() |

mmap() |

| Protection |

SetFileSecurity() |

chmod() |

| InitializeSecurityDescriptor() |

umask() |

| SetSecurityDescriptor() |

chown() |

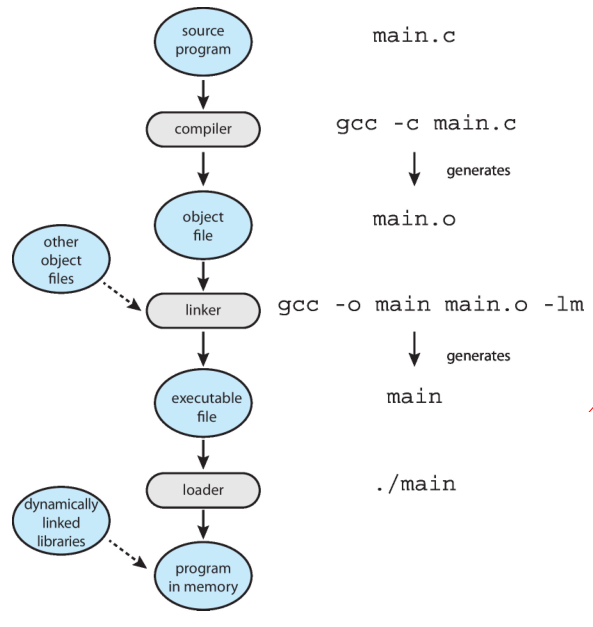

Compiler, Linker, and Loader

-

Compiler: Source code needs to be compiled into object files. Object files

are designed to be loaded into any physical memory location.

-

Linker: Linker combines object codes into a binary executable file. If the

object code corresponding to a library is needed, the library code is also

linked into the executable file.

-

Loader: Executable files must be brought into memory by the loader to be

executed.

This is statically linking the libraries to the program. However, most systems

allow a program to dynamically link libraries as the program is loaded. Windows,

for instance, supports dynamically linked libraries (DLLs).

The benefit of this approach is that it avoids linking and loading libraries

that may end up not being used by an executable file. Instead, the library is

conditionally linked and is loaded if it is required during program run time.

Aside on Dynamic Libraries

Dynamically Linked Libraries (DLLs) on windows and shared libraries (on UNIX)

are kinda-sorta the same thing. The are loaded on requirement into the program

when the program requires it.

The main advantage of shared libraries is the reduction of used memory (in my

opinion). Since it's the same library that is loaded into multiple programs, if

a program has already loaded a library, then a new program that requires it is

only given a mapping to it and the memory is not actually copied. This means

that each program THINK it has a separate copy of the library but the in memory

there is only one copy of it. So functions like printf or read, that are

used a lot, are only loaded into memory ONCE rather than a few hundred times.

Object files and executable files typically have standard formats that include:

- The compiled machine code

- A symbol table containing metadata about functions

- Variables that are references in the program.

For UNIX/Linux systems, this standard format is known as Executable and

Linkable Format (ELF). Windows systems use the Portable Executable (PE)

format. MacOS uses the Mach-O format.

Operating System Design

- User goals: operating system should be convenient to use, easy to learn,

reliable, safe, and fast.

- System goals: operating system should be easy to design, implement, and

maintain, as well as flexible, reliable, error-free, and efficient.

Operating System Structure

A system as large and complex as a modern operating system must be engineered

carefully. A common approach is to partition the task into small components.

Each of these modules should be a well-defined portion of the system, with

carefully defined interfaces and functions.

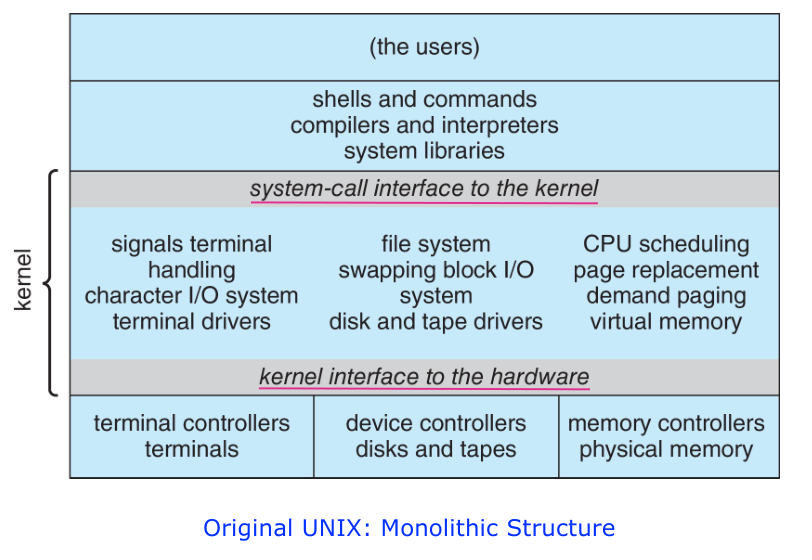

Monolithic Structure

The simplest structure for organizing an operating system is no structure at

all. That is, place ll the functionality of the kernel into a single, static

binary file that runs in a single address space.

An example of this type of structure is the original UNIX operating system,

which consists of two separable parts: Kernel, System programs.

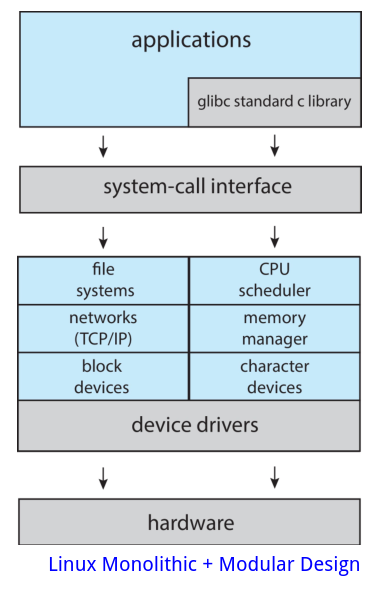

The Linux operating system is based on UNIX and is structured similarly, as

shown in the below figure. Applications typically use the standard C library

(note that the standard C on Linux is called glibc) when communicating with

the system call interface to the kernel.

The Linux kernel is monolithic in that it runs entirely in kernel mode in a

single address space. However, it does have a modular design that allows the

kernel to be modified during run time. This is discussed in a later section.

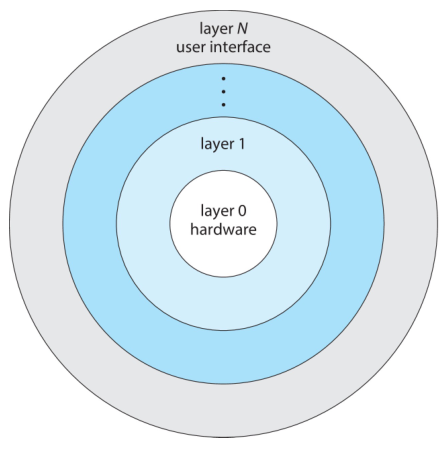

Layered Structure

-

The operating system is divided into a number of layers (levels), each built

on top of lower layers.

-

The bottom layer (layer 0), is the hardware; the highest (layer N) is the user

interface.

-

With modularity, layers are selected such that each layer only uses functions

and services provided by the layer immediately below it.

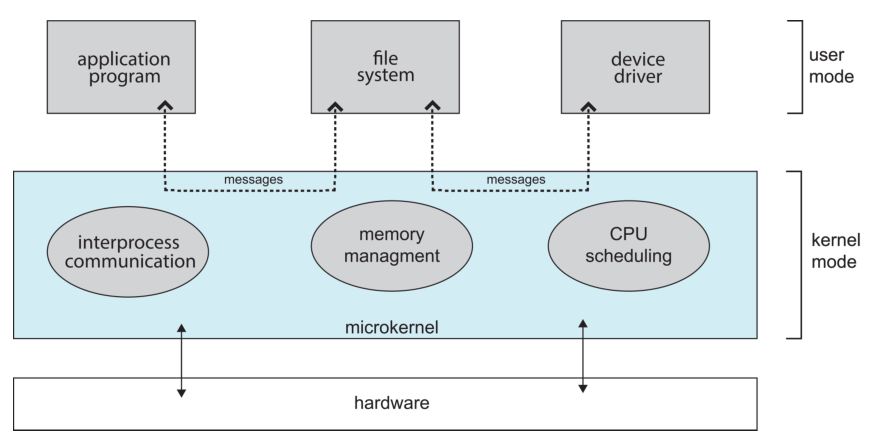

Microkernel Structure

- As UNIX expanded, the kernel became large and difficult to manage.

- In 1980s researchers at Carnegie Mellon University developed an operating

system called "Mach" that modularized the kernel using the microkernel

approach.

- This method structures the operating system by:

- removing all non-essential components from the kernel

- implementing the non-essential components as user-level programs that

reside in separate address spaces, which results in a smaller kernel.

- Typically, microkernels provide minimal:

- process management (i.e. CPU scheduling)

- memory management

- communications (IPC).

The main function of microkernel is to provide communication between the client

program and the various services that are also running in user space.

Communication is provided through message passing.

The performance of microkernels can suffer due to increased overhead. When two

user-level services must communicate, messages must be copied between the

services, which reside in separate address spaces. In addition, the operating

system may have to switch from one process to the next to exchange the messages.

The overhead involved in copying messages and switching between processes has

been the largest impediment to the growth of microkernel-based operating

systems.

Modular Structure

Perhaps the best current methodology for operating-system design involves using

loadable kernel modules (LKMs). The kernel has a set of core components and

can link in additional services via modules, either at boot or during run time.

This type of design is common in modern implementations of UNIX (such as Linux,

MacOS, and Solaris) as well as Windows.

The modular structure resembles the layered structure in that each kernel

section has defined, protected interfaces. However, modular structure is more

flexible than a layered system, because any module can call any other module.

Hybrid Structure

Most modern systems do not follow any strictly-defined structure. Instead, they

adopt the hybrid structure, which combines multiple approaches to address

performance, security, usability issues.

Examples:

Linux is monolithic, because having the operating system in a single address

space provides very efficient performance. However, it is also modular, so that

new functionality can be dynamically added to the kernel.

Building and Booting Linux

Here's a guide to building the Linux kernel yourself.

- Download Linux source code (https://www.kernel.org).

- Configure kernel using the command

make menuconfig.

- This step generates the

.config configuration file.

- Compile the main kernel using the command

make.

- The make command compiles the kernel based on the configuration parameters

identified in the

.config file, producing the file vmlinuz, which is

the kernel image.

- Compile kernel modules using the command

make modules.

- Just as with compiling the kernel, module compilation depends on the

configuration parameters specified in the

.config file.

- Install kernel modules into

vmlinuz using the command make modules_install.

- Install new kernel on the system using the command

make install.

- When the system reboots, it will begin running this new operating systems.

System Boot

BIOS

For legacy computers, the first step in the booting process is based on the

Basic Input/Output System (BIOS), a small boot loader.

Originally, BIOS was stored in a ROM chip on the motherboard. In modern

computers, BIOS is stored on flask memory on the motherboard so that it an be

rewritten without removing the chip from the motherboard.

- When the computer is first powered on, the BIOS is executed.

- This initial boot loader usually does nothing more than loading a second boot

loader, which is typically located at a fixed disk location called the boot

block. Typically, the second boot loader is a simple program and only knows

the address and the length of the remainder of the bootstrap program.

- In the typical scenario, the remainder of the bootstrap program is executed

to locate the OS kernel.

UEFI

For recent computers, the booting process is based on the Unified Extensible

Firmware Interface (UEFI).

UEFI has several advantages over BIOS:

- Better support for larger disks (over 2TB)

- Flexible pre-OS environment: UEFI can support remote diagnostics and repair

computers, even with no operating system installed.

Details found here:

wikipedia

CH3: Processes

Modern computer systems allow multiple programs to be loaded into memory and

executed concurrently. Formally, a process is a program in execute.

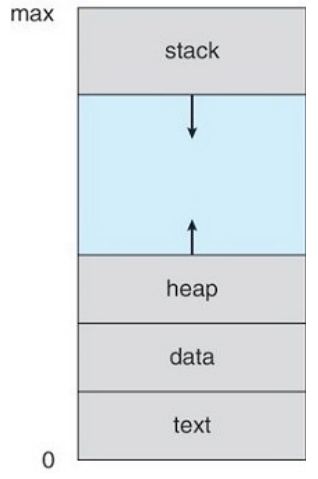

A process has a layout in memory that looks like the following diagram:

- Text: Contains executable code.

- Data: Global variables.

- Heap: Memory that is dynamically allocated during runtime.

- Stack: Temporary data storage when functions are invoked.

The text and data sections are fixed as their sizes do not change during

program run time. The stack and heap however, can grow and shrink

dynamically during the program execution.

Each time a function is called, an activation record containing function

parameters, local variables, and the return address is pushed onto the stack;

when control is returned from the function, the activation record is popped from

the stack.

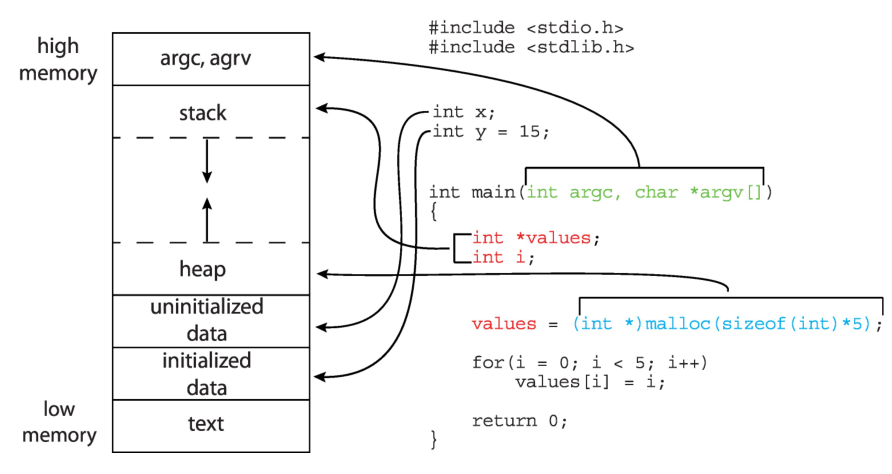

Memory Layout of a C program

- The data section is divided into two sub-sections: initialized and

uninitialized data.

- A separate section is provided for the

argc and argv parameters passed to

the main() function from the operating system.

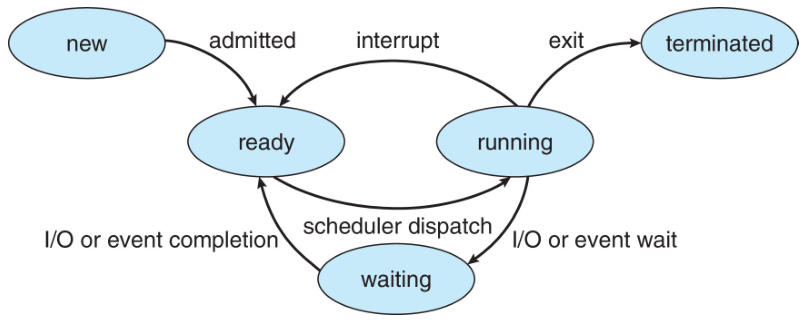

Process State

As a process executes, it can change state. The state of a process is defined in

part by the activity of that process.

The possible states a process can be in:

- New: The process is created.

- Running: Instructions in the process are being executed.

- Waiting: The process is waiting for some event to occur.

- Ready: The process is waiting to be assigned to a processor.

- Terminated: The process has been terminated.

Note that the state names are generic and they vary across operating systems.

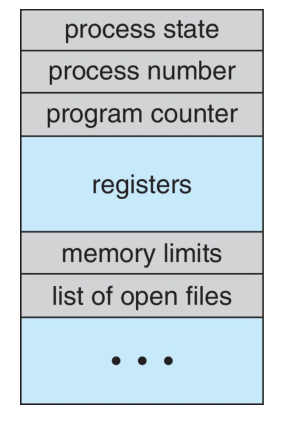

Process Control Block

The Process Control Block (PCB), also known as Task Controlling Block (TCB), is

a data structure in the OS kernel, which contains the information needed to

manage the scheduling of a process.

- State: state of the process.

- Number: process ID.

- Program counter: location of instruction to execute.

- CPU registers: contents of all process-related registers.

- CPU scheduling information: process priority, points to queues etc.

- Memory management information: memory allocated to the process.

- Accounting information: CPU used, clock time elapsed since state, time limits.

- IO status information: I/O devices allocated to process, list of open files, etc.

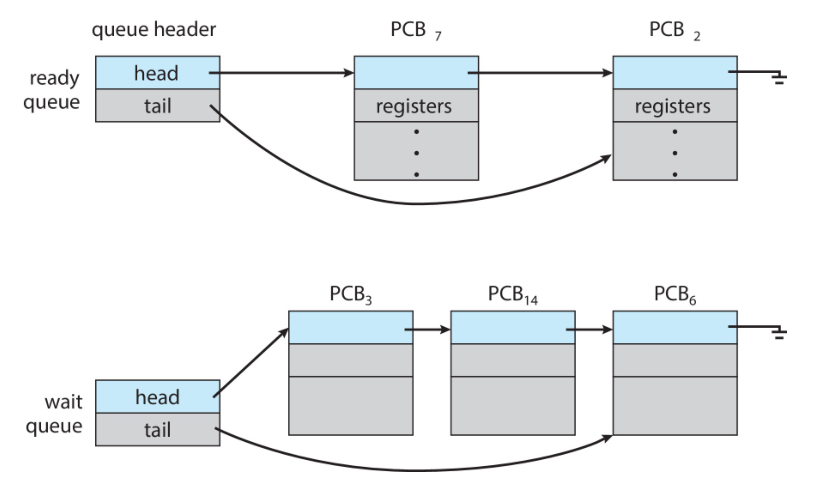

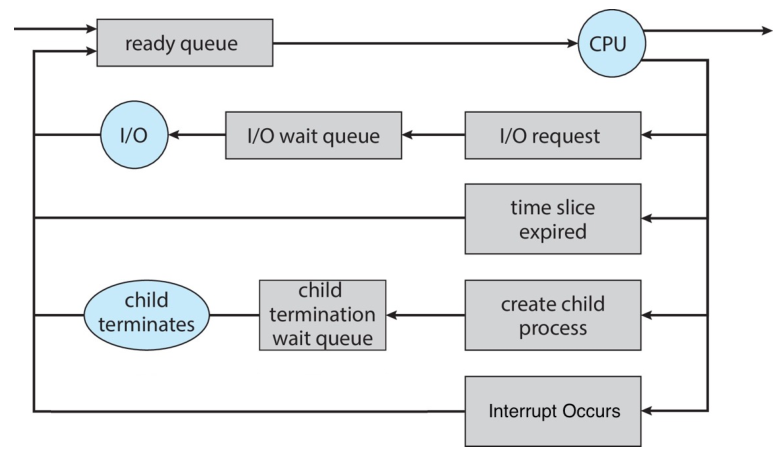

Scheduling

In a computer running concurrent processes, the OS needs to schedule processes

effectively. The number of processes currently in memory is known as the

degree of multiprogramming.

Most processes can be described as either I/O bound (spends most of its time

doing I/O operations) or CPU bound (spends most of its time doing

computations).

To schedule processes effectively, an OS typically maintains multiple scheduling

queues, such as the ready queue and the wait queue.

-

Ready queue: a set of processes residing in main memory, ready and waiting

to be executed.

-

Wait queue: a set of processes waiting for an event (e.g. I/O).

Here is an example of a queueing diagram:

CPU Scheduler

The role of the CPU scheduler is to select a process from the processes in

the ready queue and allocate a CPU core to the selected one. To be fair to all

processes and guarantee timely interaction with users, the CPU scheduler must

select a new process for the CPU frequently.

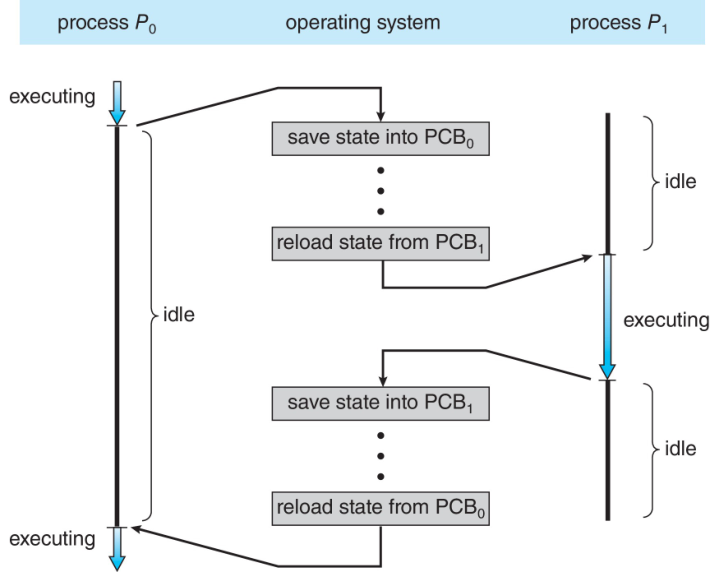

Context Switching

Switching the CPU core to another process requires performing a state save of

the current process and a state restore of a different process. This task is

known as a context switch. When a context switch occurs, the kernel saves

the context of the old process in its PCB and loads the saved context of the new

process.

Context switch time is overhead, because the system does not do useful work

while switching. Switching speed varies from machine to machine, depending on

memory speed, number of registers etc. Typically, it takes several microseconds.

Process Creation

The OS needs to provide mechanisms for process creation and process termination.

The creating process is called a parent process, and the created process is

called the child process of the parent process.

A child process may in turn create other processes, forming a tree of

processes.

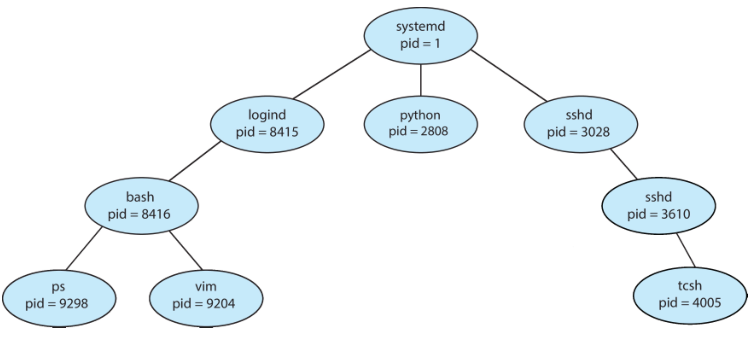

The figure above is a typical tree of processes for the Linux operating system,

showing the name of each process and its pid. Most operating systems identify

each process using a unique process identifier (pid), which is typically an

integer number.

The systemd process (init on Arch Linux) always has a pid of 1 and servers

as the root process for all user processes, and is the first user process

created when the system boots.

When a process creates a new process, two possibilities exist for its execution:

- The parent continues to execute concurrently with the children.

- The parent waits till some or all of its children have terminated.

There are also two address-space possibilities for the new process:

- The child process is a duplicate of the parent process, it has the same

data as the parent (see note under for more details).

- The child process has a new program loaded into itself.

NOTE (Additional details): When a child process is created on Linux, the OS

maps the same physical memory of the process to two separate processes. Although

each process THINKS they have an identical but separate copy of the data, the

data is only stored once. However, if there is a write operation performed on

the data, then that data that was written to is first copied to have separate

physical versions. Linux follows the "copy-on-write" principle

(https://en.wikipedia.org/wiki/Copy-on-write).

Creating Processes On Unix

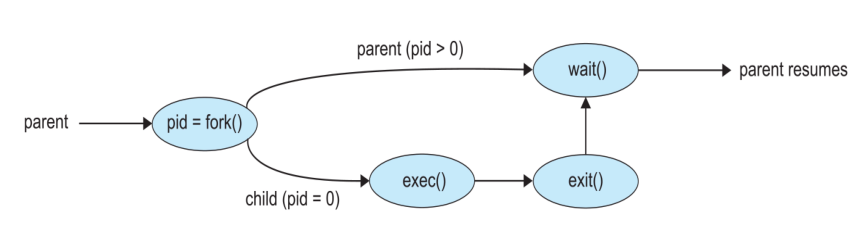

On Unix, a child process is created by the fork() system call. The parent and

child process continue to be executed and the next instruction is the one right

after the fork(), with one difference: The return code for the fork() is

zero for the child process and is a positive process identifier of the child for

the parent process. If fork() leads to a failure, it return -1.

- Parent:

fork() returns child process ID.

- Child:

fork() returns 0.

After a fork() system call, the child process can use one of the exec()

family of system calls to replace the process's memory space with a new program.

After a fork() the parent cal issue a wait() system call to move itself to

the wait queue till the termination of the child.

When the child terminates, the wait() system call in the parent process is

completed and the parent process resumes its execution.

Process Creation In C

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

#include <sys/wait.h>

int main()

{

int execlp_status;

int wait_status;

pid_t pid;

/* fork a child process */

pid = fork();

if (pid < 0) { /* error occurred */

fprintf(stderr, "Fork Failed");

return 1;

}

else if (pid == 0) { /* child process */

execlp_status=execlp("ls","ls","-l",NULL);

if (execlp_status == -1) {

printf("Execlp Error!\n");

exit(1);

}

}

else { /* parent process */

/* parent will wait for the child to complete */

wait(&wait_status);

printf("Child Complete!\n");

}

return 0;

}

pid_t pid is a variable created to hold the process ID.fork() creates a child process.execlp() is one of the exec() family of system calls.wait(&wait_status) waits for the child.wait() returns the PID of the process that ended (useful if wait called with

multiple processes).wait_status holds the exit status of the child that exited.

To learn more about any of these functions, you can use the man command to

read their manual. There also exists a function waitpid for more control on

the wait command. man 2 wait will how the details of this function.

Useful man pages:

man 2 fork

man 2 wait

man pid_t

man execlp # shows the family of exec functions

If you want your man pages to look better follow the instructions here:

Pretty man pages.

Exec Family

The exec() family includes a series of system calls, we focus on two of them:

execlp() and execvp().

- The

l indicates a list arrangement, the execlp() function uses a series of

NULL terminates arguments.

- The

v indicates an array arrangement, the execvp() function uses an arrays

of NULL terminated arguments.

- The

p indicates the current value of the environment variable PATH to be

used when the system searches for executable files.

TODO: Insert details of each of the functions and how to use them.

Interprocess Communication

Processes executing concurrently can be divided into two categories:

- Independent: if it does not share data with any other processes executing

in the system.

- Cooperating: if it can affect or be affected by the other processes

executing in the system. Clearly, any process that shares data with other

processes is a cooperating process.

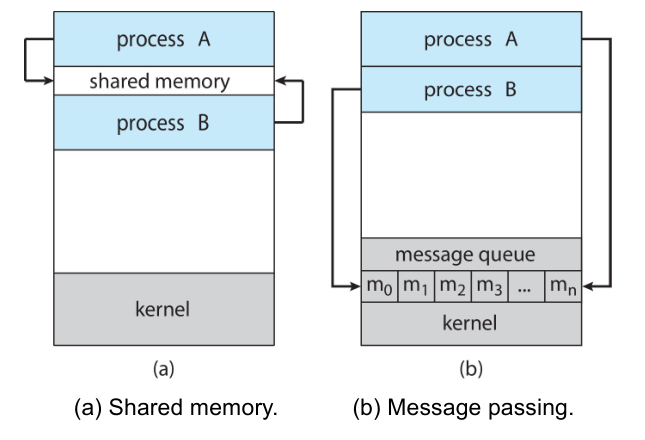

Cooperating processes require an interprocess communication (IPC) mechanism

that will allow them to exchange data. There are two fundamental models for

interprocess communication: shared memory and message passing.

Shared Memory

This uses shared memory which requires communicating processes to establish a

region of shared memory.

Typically, a shared-memory region resides in the address space of the process

creating the shared-memory segment. Other processes that wish to communicated

using this shared-memory segment must attach it to their address space.

Consider a producer-consumer problem:

- A producer process produces information that is required by other processes.

- A consumer process reads and uses information is produced by the producer.

We create a buffer that can be filled by the producer and emptied by the

consumer. An unbounded buffer places no practical limit on the size of the

buffer. The bounded buffer assumes a fixed buffer size. In the second case,

the consumer must wait if buffer is empty and the producer must wait if buffer

is full.

#define BUFFER_SIZE 10

typedef struct {

// ...

} item;

item buffer[BUFFER_SIZE];

int in = 0;

int out = 0;

The shared buffer is implemented as a circular array with two logical

pointers in and out.

The producer uses the following code to add items to the buffer:

item next_produced;

while (true) {

/* Produce and item in next_produced */

while (((in + 1) % BUFFER_SIZE) == out) {

// do nothing while full.

}

buffer[in] = next_produced;

in = (in + 1) % BUFFER_SIZE;

}

The consumer uses the following code to read items from the buffer:

item next_consumed;

while (true) {

while (in == out) {

/* do nothing when empty */

}

next_consumed = buffer[out];

out = (out + 1) % BUFFER_SIZE;

/* consume the item in next consumed */

}

This wouldn't work at the moment because buffer is just an array and the

processes would maintain separate copies. buffer needs to be "modified" to be

a region of shared memory. This scheme allows at most BUFFER_SIZE - 1 items in

the buffer at the same time.

Message Passing

Message padding provides a mechanism to allow processes to communicate and

synchronize their actions without sharing the same memory region.

It is particularly useful in a distributed environment, where the communicating

processes may reside on different computers connected by a network.

A message-passing facility provides at least two operations: send(message) and

receive(). If process P and Q want to communicate, a communication link

must exist between them.

Here are few options for the link:

- Direct or indirect communication

- Synchronous or asynchronous communication

- no buffering or automatic buffering.

Direct Communication

In direct communication, each process that wants to communicate must

explicitly name the recipient or sender of the communication.

A communication link in this scheme has the following properties:

- A link can be established between every pair of processes that want to

communicate. The processes need to know each other's identity to communicate.

- A link is associated with exactly two processes.

- Between each pair of processes, there exists exactly one link.

Indirect Communication

With indirect communication, the messages are sent to and received from

mailboxes or ports.

- A mailbox can be viewed abstractly as an object into which messages can be

placed by processes and from which messages can be removed.

- Each mailbox has unique identification.

- For example, POSIX message queues use an integer value to identify a mailbox.

In this scheme, a communication link has the following properties:

- A link is established between a pair of processes only if both members of the

pair have a shared mailbox.

- A mailbox may be associated with more than two processes.

- Between each pair of communicating processes, a number of different links may

exists, with each link corresponding to one mailbox.

Synchronous Communication

Communications between processes takes place through calls to send() and

receive() primitives.

There are different design options for implementing each primitive. Message

passing may be either blocking or non-blocking - also known as synchronous and

asynchrounous.

- Blocking send: After starting to send a message, the sending process is

blocked until the message is received by the receiving process or by the

mailbox.

- Non-blocking send: The sending process send the message and resumes

other operations.

- Blocking receive: After starting to wait for a message, the receiver is

blocked until a message is available.

- Non-blocking receive: The receiver is not blocked while it waits for the

message.

Buffering

Whether communication is direct or indirect, messages exchanged by communicating

processes reside in a temporary queue. Both the sender and receiver maintain a

queue.

- Zero capacity: The queue has a maximum length of zero.

- Bounded capacity: The queue has a finite length

n; thus at most n

messages can reside it in.

- Unbounded capacity: The queue's length is potentially infinite.

The zero-capacity case is sometimes referred to as a message system with no

buffering. The other cases are referred to as message systems with automatic

buffering.

Shared Memory vs Message Passing

Message passing:

Pros:

- Easy to setup and use.

- Can be used over networks and distributed environments.

Cons:

- Kernel needs to be involved in every distinct exchange of data.

- Is slow since messages need to be handled by the kernel.

Shared Memory:

Pros:

- Faster speeds since only required to establish a shared memory region.

- Most flexibility, you can access memory in any way the programmer likes.

Cons:

- More work for programmer since mechanisms needs to be explicitly coded.

- Less suitable for distributed environments - difficult to share memory.

Shared Memory Implementation

With POSIX , shared memory is implemented using memory-mapped file. The code is

based on the instruction here:

wikipedia

- Create a shared-memory object using the

shm_open system call.

- Once the object is created, the

ftruncate function is used to configure the

size of the object in bytes.

- The

mmap function maps a file to a memory section so that file operations

aren't needed.

POSIX Producer:

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <unistd.h>

#include <sys/shm.h>

#include <sys/stat.h>

int main() {

const int SIZE = 4096; // the size (in bytes) of shared memory object

const char *name = "OS"; // name of the shared memory object

const char *message_0 = "Hello"; // strings written to shared memory

const char *message_1 = "World"; // strings written to shared memory

int shm_fd; // shared memory file descriptor

void *ptr; // pointer to shared memory object

// Create the shared memory object

shm_fd = shm_open(name, O_CREAT | O_RDWR, 0666);

// configure the size of the shared memory object

ftruncate(shm_fd, SIZE);

// memory map the shared memory object

// When the first parameter is set to 0, the kernel chooses the address at

// which to create the mapping. This is the most portable method of

// creating a new mapping.

// The last parameter is the offset. When it is set to 0, the start of the

// file corresponds to the start of the memory object.

// Designed memory protection: PROT_WRITE means it may be written.

// MAP_SHARED indicates that updates to the mapping are visible to other processes.

ptr = mmap(0, SIZE, PROT_WRITE, MAP_SHARED, shm_fd, 0);

// sprintf(): It generates a C string and places it in

// the memory so that ptr points to it.

sprintf(ptr, "%s", message_0);

// Note the NULL character at the end of the string is not included.

// strlen() returns the length of a string, excluding the NULL character

// at the end of the string.

ptr += strlen(message_0);

sprintf(ptr, "%s", message_1);

ptr += strlen(message_1);

return 0;

}

POSIX Consumer:

#include <stdio.h>

#include <stdlib.h>

#include <fcntl.h>

#include <sys/shm.h>

#include <sys/stat.h>

int main() {

const int SIZE = 4096; // the size (in bytes) of shared memory object

const char *name = "OS"; // name of the shared memory object

int shm_fd; // shared memory file descriptor

void *ptr; // pointer to shared memory object

// open the shared memory object

shm_fd = shm_open(name, O_RDONLY, 0666);

// memory map the shared memory object

// Designed memory protection: PROT_READ means it may be read.

ptr = mmap(0, SIZE, PROT_READ, MAP_SHARED, shm_fd, 0);

// read from the shared memory object

printf("%s", (char *)ptr);

// remove the shared memory object

shm_unlink(name);

return 0;

}

When compiling the above programs, you need to link the realtime extensions

library by adding -lrt to the compiling command.

You can use the following functions to work with shared memory:

shm_open()ftruncate()mmap()shm_unlink()

Pipe

Pipe is a type of message-passing interprocess communication method.

There are different types of pipes and each one type has different conditions

and implementations:

-

Unidirectional vs bidirectional: The flow of information is either in one

direction or both directions.

-

Half duplex vs full duplex: If information can flow in both directions,

can it flow in both ways at the same time?

-

Relationship: Does the pipe enforce a relationship between the processes

such as a parent-child relationship.

-

Network communication: Can a pipe be formed over a network or does it

have to reside on the same machine?

Unnamed Pipes (ordinary pipe)

Ordinary pipes allow two processes to communicate in a standard

producer-consumer fashion where the producer writes to one end of the pipe

called the write end and the consumer reads from the other end called the

read end.

Unnamed pipes are:

-

Unidirectional - allow only one-way communication. Two different pipes can be

used to communicate in both ways.

-

A parent-child relationship is required to create a pipe this way. Usually

a parent creates a pipe and uses it to communicate to the child process.

-

As ordinary pipes require a parent-child relationship, this forces the

communication to be on the same machine. That is, network communication is not

allowed.

Ordinary pipes on UNIX systems are created used the pipe(int fd[]) function.

Note, that the fd is used as the variable name as it stands for file

descriptor.

fd[0]: the read end of the pipe.fd[1]: the write end of the pipe.

On UNIX, pipes are just treated as a special type of file. Therefore, we can use

the regular write() and read() functions to write and read from these pipes.

For more details on this topic, you can see:

Analysing Pipe operating In C

Example

#include <sys/types.h>

#include <stdio.h>

#include <string.h>

#include <unistd.h>

#define BUFFER_SIZE 25

#define READ_END 0

#define WRITE_END 1

int main (int argc, char *argv[]) {

char write_msg[BUFFER_SIZE] = "Greetings";

char read_msg[BUFFER_SIZE];

int fd[2];

pid_t pid;

if (pipe(fd) == -1) {

fprintf(stderr, "pipe failed.\n");

return 1;

}

pid = fork();

if (pid < 0) {

fprintf(stderr, "Fork failed.\n");

return 1;

}

if (pid > 0) {

/* close the unused end of the pipe */

close(fd[READ_END]);

write(fd[WRITE_END], write_msg, strlen(write_msg) + 1);

close(fd[WRITE_END]);

} else {

close(fd[WRITE_END]);

read(fd[READ_END], read_msg, BUFFER_SIZE);

printf("read: %s\n", read_msg);

close(fd[READ_END]);

}

return 0;

}

Named Pipes

Named pipes provide a much more powerful communication tool:

- Communication can be bidirectional.

- Communication is only half-duplex (one at a time).

- No parent-child relationship is required.

- Named pipes continue to exist after communicating processes haves terminated.

- Named pipes require that communicating processes reside on the same

machine.

Note: on MS Windows version of named pipe, full-duplex communication is allowed

and the communicating processes may reside on different machines.

Named pipes are referred to as FIFOs in UNIX systems. Once created, they appear

as files in the file system.

Named pipes are created on UNIX systems using the mkfifo() system call and

manipulated using the open(), read(), write() and close() system calls.

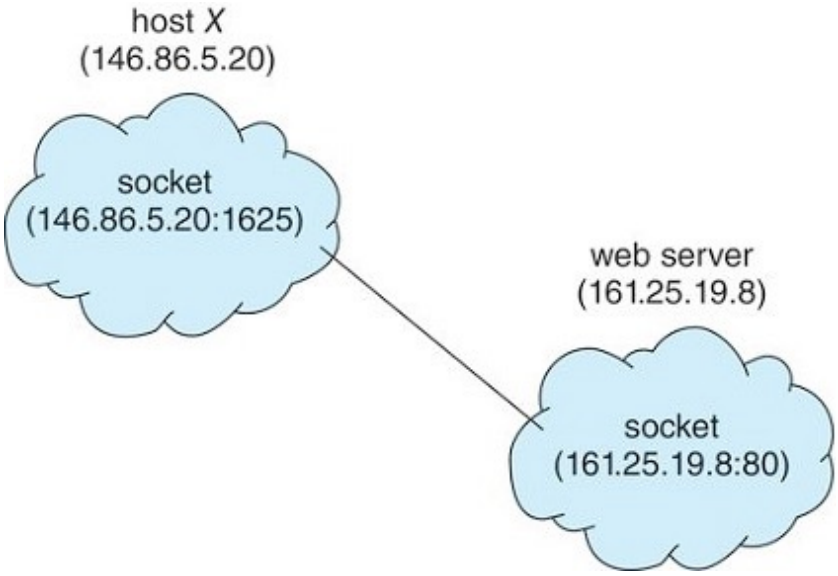

Socket

A socket refers to an endpoint for sending or receiving data across a

computer network. A pair of processes communicating over a network use a pair of

sockets (one for each process).

A socket is identified by an IP address concatenated with a port number, such as

185.139.135.65:5000.

Remote Procedure Call (RPC)

RPC is a special function call. The function (procedure) is called on the local

computer, but the function is executed on the remote computer. Once the function

execution is completed, the result is returned back to the local computer.

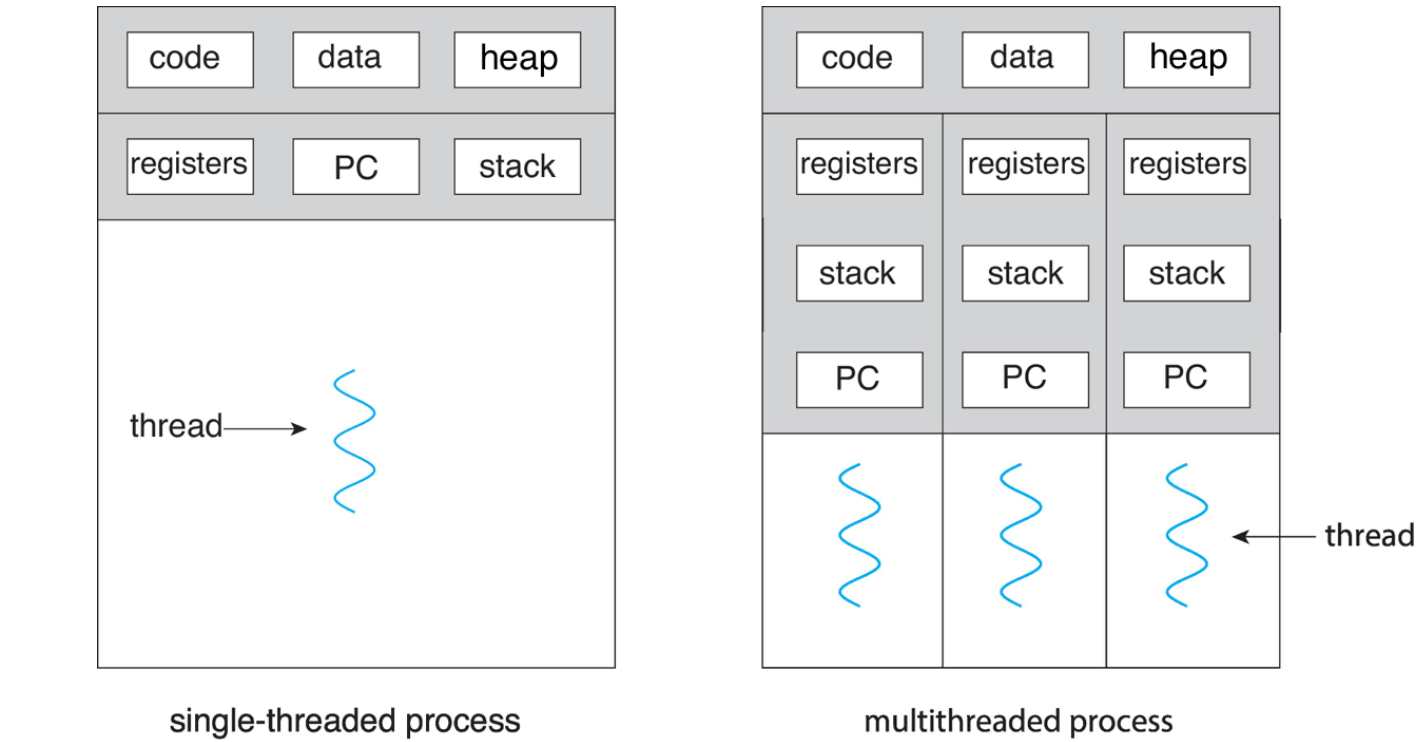

CH4: Threads and Concurrency

A thread is a basic unit of CPU utilization. It involves a thread ID, a

program counter (PC), a set of registers, and a stack.

It shares the following resources with other threads belonging to the same

process:

- Code/Text section

- Data section

- Heap section

- Other OS resources.

Benefits of Multithreaded Programming

-

Responsiveness: Multithreading an interaction application may allow a

program to continue running even if parts of it is blocked or is performing a

length operation.

-

Resource sharing: Threads share memory and the resources of the process

which they belong to. This makes it simpler to move resources between threads

compared to moving resources between processes.

-

Cost: Allocating memory and resources for process creation is costly.

Because threads share the resources of the process to which they belong, it is

more economical to create and context-switch threads.

-

Speed: The benefits of multithreaded programming can be even greater in a

multiprocessor architecture, where threads may run in parallel on different

processing processors.

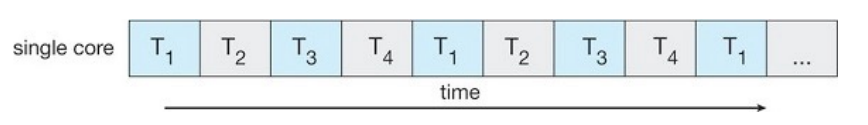

Multicore Programming

Multithreaded programming provides a mechanism for more efficient use of

multiprocessor or multi-core systems.

Consider an application with four threads. On a system with a single computing

core, concurrency merely means that the execution of the thread will be

interleaved over time.

On a system with multiple cores, concurrency means that some threads can run

in parallel, because the system can assign a separate thread to each core.

Concurrency VS Parallelism

-

A concurrent system supports more than one task by allowing all tasks to

make progress using context-switches.

-

A parallel system can perform more than one task simultaneously. Multiple

instructions are being run at a given instant.

Therefore, it is possible to have concurrency without parallelism.

Challenges

Designing new programs that are multithreaded involves the following challenges:

-

Identifying tasks: Find tasks that can be done simultaneously.

-

Balance: Work between tasks should be similar to maximize speed.

-

Data splitting: Data access must be managed as now data may be accessed

by multiple tasks as the same time.

-

Data dependency: When one task depends on data from another, ensure that

the execution of the tasks is synchronized to accommodate the data dependency.

-

Testing and debugging: Testing and debugging multithreaded programs is

inherently more difficult.

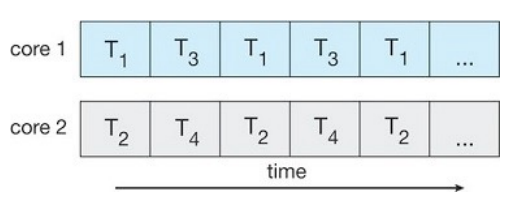

User Level Threads VS Kernel Level Threads

Relationship

For the threads belonging to the same process, a relationship must exist between

user threads and kernel threads. There are three common ways of establishing

such as relationship:

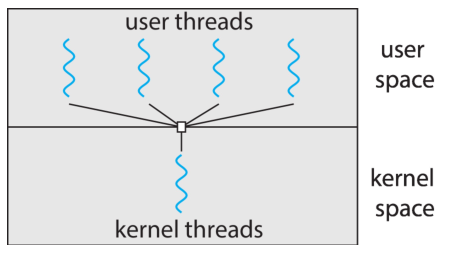

- Many-to-one model

- One-to-one model

- Many-to-many model.

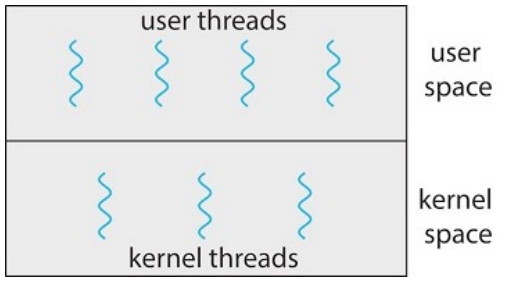

Many-to-One Model

This model maps multiple user-level threads to one kernel level thread.

-

A process is given a single kernel thread. There are multiple user-level

threads. Thread management is done by the thread library in the user space.

-

The entire process is blocked if one threads makes a blocking system call.

-

Multiple threads are unable to run in parallel on multicore systems.

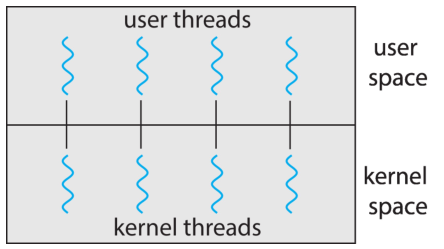

One-to-One Model

This model maps each user-level thread to one kernel level thread.

-

A process includes multiple kernel threads. There are multiple user-level

threads. Each user-level thread is mapped to one kernel thread.

-

It allows one thread to run when another thread makes a blocking system call.

-

It also allows multiple threads to run in parallel on multicore systems.

-

The drawback is that it creates a large number of kernel threads which may

worsen system performance.

Linux and Windows implement the one-to-one model.

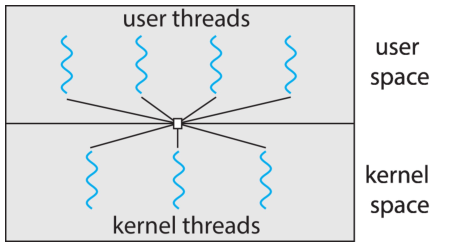

Many-to-Many Model

It multiplexes multiple user-level threads corresponding to a process to a

smaller or equal number of kernel threads corresponding to the same process.

-

The number of kernel threads may depends on a particular application or a

particular application.

-

An application may be allocated more kernel threads on a system with eight

processing cores than a system with four cores.

-

This means the developer can create as many threads as the like and the number

of kernel threads is not too large.

-

However, it is difficult to implement in practice and the increasing number of

processing cores appearing on most systems has reduces the importance of

limiting kernel threads.

Pthreads

Pthreads is a threads extension of the POSIX standard. This may be provided

as either a user-level or a kernel-level library. On Linux, it is implemented as

a kernel level library.

There are two strategies for creating multiple threads: asynchronous threading

and synchronous threading.

Asynchronous threading: Once the parent creates a child thread, the parent

resumes its execution, so that the parent and child execute concurrently and

independently of one another. Asynchronous threading is commonly used for

designing responsive user interfaces.

Synchronous threading: It occurs when the parent thread creates one or more

children and then must wait for all of its children to terminate before it

resumes. Typically, synchronous threading involves significant data sharing

among threads.

Example

Note: To compile programs using pthread.h, we need to include the -lpthread

argument to include the threading library when compiling our program.

#include <pthread.h>

#include <stdio.h>

#include <stdlib.h>

int sum;

void *runner(void *param) {

int upper = atoi(param);

sum = 0;

for (int i = 1; i <= upper; i++) {

sum += i;

}

pthread_exit(0);

}

int main(int argc, char *argv[]) {

pthread_t tid;

pthread_create(&tid, NULL, runner, argv[1]);

pthread_join(tid, NULL);

printf("sum = %d\n", sum);

return 0;

}

Lifetime

Each C program starts with one thread, which corresponds to the main()

function. When the main() thread terminates, all threads belonging to the same

program will terminate. In general, a thread terminates if its parent thread

terminates.

Thread Count

Hyperthreading: Hyperthreading is Intel's simultaneous multithreading (SMT)

implementation used to improve parallelization of computations performed on x86

microprocessors. With hyperthreading, one physical core appears as two virtual

cores to the operating system, allowing concurrent scheduling of two threads per

core.

Thread Count: Theoretically, the total number of threads should be equal to

the number of cores of the system for optimal performance. On systems that

support hyperthreading, the number should be equal to twice the number of cores.

However, practically, the optimal number of threads vary on bunch of parameters

such as other running programs and the scheduling algorithm. Therefore, it is

best to experiment and find the best one.

Implicit Threading

Designing multithreaded programs involve many challenges, one way to address

these difficulties is to transfer the creation and management of threading from

application developers to compilers and run-time libraries. This strategy,

termed implicit threading, is an increasingly popular trend.

Implicit threading involves identifying tasks rather than threads. Once

identified, this is passed to the library which decides the optimal number of

threads to run. The library handles the thread creation and management.

The general idea behind a thread pool is to create a number of threads at

startup and place them into a pool, where they sit and wait for work. When the

OS receives a computation request, rather than creating a thread, it instead

submits the request to the thread pool and resumes, waiting for additional

requests.

Thread pools offer these benefits:

-

Servicing a request with an existing thread is often faster than waiting and

thereafter creating a thread.

-

A thread pool limits the number of threads that exists at any time point. This

is particularly important systems that cannot support large number of

concurrent threads.

-

Thread creation and management is transferred from application developers to

compilers and run-time libraries.

The numbers of threads in the pool can be set heuristically according to factors

such as:

- The number of CPUs in the system

- The amount of physical memory

- The expected number of concurrent client requests.

OpenMP

Open Multi-Processing (OpenMP) is an API for programs written in C, C++, or

FORTRAN, which provides support for parallel programming in shared-memory

environments.

OpenMP identifies parallel regions as blocks of code that may run in parallel.

Application developers insert compiler directives into their code at parallel

regions, and these directives instruct the OpenMP run-time library to execute

the region in parallel.

Example:

#include <stdlib.h>

#include <stdio.h>

#include <omp.h>

#define BUF_SIZE 1000000000

int main(int argc, char *argv[]) {

int *a = malloc(BUF_SIZE * sizeof(int));

int *b = malloc(BUF_SIZE * sizeof(int));

int *c = malloc(BUF_SIZE * sizeof(int));

// Initialize arrays

#pragma omp parallel for

for (int i = 0; i < BUF_SIZE; i++) {

a[i] = 3;

b[i] = 7;

c[i] = 0;

}

#pragma omp parallel

{

printf("I am a parallel region.\n");

}

#pragma omp parallel for

for (int i = 0; i < BUF_SIZE; i++) {

c[i] = a[i] + b[i];

}

long long sum = 0;

#pragma omp parallel for reduction(+:sum)

for (int i = 0; i < BUF_SIZE; i++) {

sum += c[i];

}

printf("total sum: %lld\n", sum);

free(a);

free(b);

free(c);

return EXIT_SUCCESS;

}

The output of which is:

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

I am a parallel region.

total sum: 10000000000

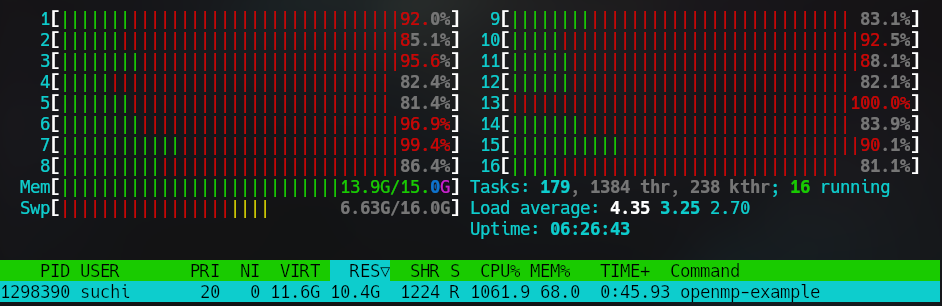

Here we see that the string is printed 16 times. This is because the machine

that this code was run on has 16 threads. The for-loops are also executed

in parallel for initialization and summation.

In this code, the #pragma omp parallel for reduction(+:sum) directive tells

OpenMP to parallelize the loop and use the sum variable to accumulate the total

sum. Each thread will have its own local copy of sum, and at the end of the

parallel region, OpenMP will automatically sum up all these local copies into

the original sum variable. The + in reduction(+:sum) specifies that the

reduction operation is summation.

Here is my CPU while the process is running:

I wrote a single threaded version without using openmp and compared their

running times. The single threaded version took 22 seconds to execute where as

the openmp version only took 6 seconds. It was also clear that the single

threaded version was only using one core at any given time.

Here we clearly see that all my threads are being used. If you notice the code

doesn't have too many details about the parallelization - just a few lines about

the type of parallelization that we require.

CH5: CPU Scheduling

CPU scheduling is the basis of multiprogrammed operating systems. On modern

operating systems, it is kernel-level threads (no processes) that are in fact

being scheduled by the operating system. However, the terms "process scheduling"

and "thread scheduling" are often used interchangeably.

Typically, process execution alternates between two states:

- CPU burst: Where a process is mainly using the CPU.

- I/O burst: Where a process is performing I/O operations.

A process flips between CPU bursts and IO bursts. Eventually the final CPU

burst ends with a system request to terminate execution.

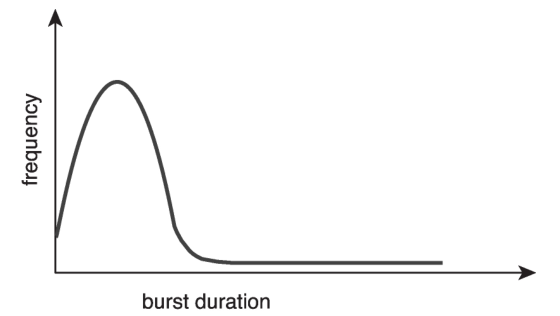

Although the durations of CPU bursts vary greatly from process to process.

However, most processes tend to have frequency curve similar to that shown in

the following figure:

The curve is generally characterized as exponential or hyper exponential, with a

large number of short CPU bursts and a small number of long CPU bursts.

Whenever the CPU becomes idle, the operating system must select one of the

processes in the ready queue to be executed. The selection process is carried

out by the CPU scheduler, which selects a process from the processes in memory

that are ready to execute and allocates the CPU to that process.

The ready queue is a list of processes ready to be executed which is usually

records of process control blocks (PCBs) of the processes.

Preemptive VS Nonpreemptive

-

Nonpreemptive schedulers: once the CPU has been allocated to a process,

the process keeps the CPU until it releases it either by terminating or by

switching to the waiting state.

-

Preemptive schedulers: after the CPU has been allocated to a process, the

process could possibly lose the CPU before it terminates or switches to the

waiting state.

Dispatcher

The dispatcher is the module that gives control of the CPU's core to the

process selected by the CPU scheduler. This function involves the following

steps:

- switching context

- switching to user mode

- jumping to the proper location in the user program to restart the program.

The time it takes for the dispatcher to stop one process and start another

running is known as the dispatch latency.

Scheduling Algorithms

You'll find all the scheduling algorithms coded in C in the following

repository:

GitHub.

Evaluation Criteria For CPU Schedulers

Here are some typical criteria used to rank algorithms:

- CPU utilization: the percentage of the time when CPU is utilized.

- Throughput: the number of processes that complete their execution per unit

of time.

- Turnaround time: the interval from the time of submission of a process to

the time of completion.

- Waiting time: sum of the time spent waiting in the ready queue.

- Response time: the time from the submission of a process until the first

response is produced.

First-Come First-Served (FCFS)

The simplest CPU-scheduling algorithm is the first-come first-severed (FCFS)

scheduling algorithm. With this scheme, the process that requests the CPU first

is allocated first.

The implementation of the FCFS policy is easily managed with a FIFO queue. When

a process enters the ready queue, its PCB is linked onto the tail of the queue.

When the CPU is free, it is allocated to the process at the head of the queue.

The running process is then removed from the queue.

The negative with such an algorithm is that the average waiting time under the

FCFS policy is often quite long.

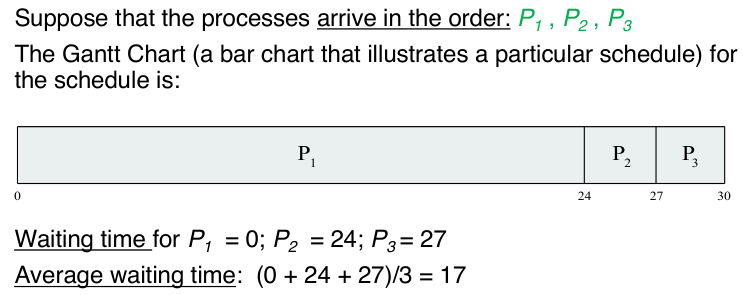

Suppose the processes arrive in the order: P1, P2, P3.

This algorithm suffers from the "convoy effect". The convoy effect is a

scheduling phenomenon in which a number of processes wait for one process to get

off a core, causing overall device and CPU utilization to be suboptimal.

Overview of FCFS:

- Simple implementation

- Nonpreemptive nature

- Long average waiting time

- Experiences convoy effect

- Bad for interactive systems.

Here is the algorithm written for a simulation of CPU scheduler:

Github FCFS

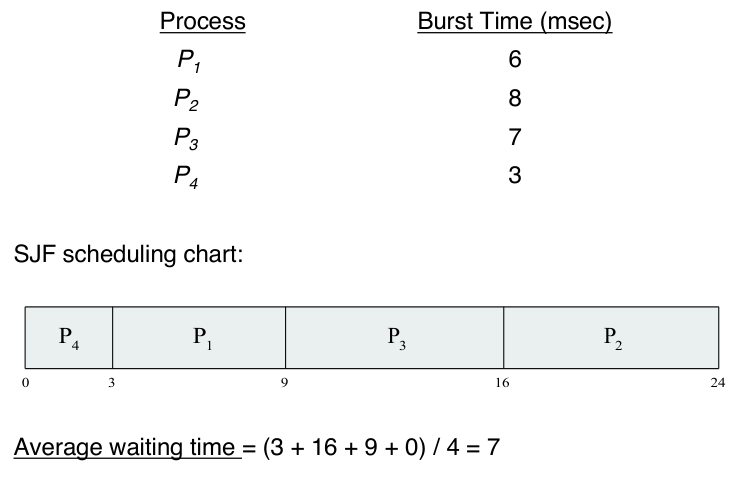

Shortest Job First (SJF)

The shortest-job-first (SJF) is an algorithm that schedules the job with the

shortest CPU burst first. When the CPU is available, it is assigned to the

process that has the smallest next CPU burst. If two processes have the same CPU

burst then FCFS scheduling is used to break the tie.

The SJF scheduling algorithm is provably optimal, in that it gives the minimum

average waiting time for a given set of processes.

Here is the algorithm written for a simulation of CPU scheduler:

Github Nonpreemptive SJF

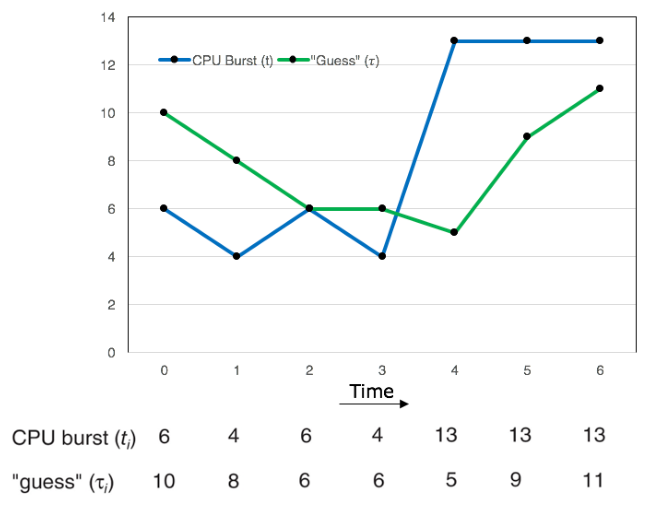

Predicting Next CPU Burst

However, we need to know the next CPU burst before we schedule algorithms based

on this. But, we can't know the CPU burst of a process before it is scheduled.

Therefore, we use a predicted CPU burst to schedule algorithms.

The following notations are used in the prediction:

- \(t_n\): the length of the current CPU burst.

- \(\tau_n\): the predicted value of the current CPU burst.

- \(\tau_{n+1}\): the predicted value for the next CPU burst.

- \(\alpha\): a tuning parameter \((0 \leq \alpha \leq 1)\), typically, \(\alpha = 1/2\).

The following equation can be used to predict the next CPU burst:

\[

\tau_{n+1} = \alpha t_n + (1 - \alpha)\tau_n

\]

This equation adds a fraction of the previous prediction and the previous CPU

burst to calculate the predicted CPU burst. The parameter \(\alpha\) controls

the relative weight of the previous CPU burst in our prediction. So, the highest

the value of \(\alpha\), the more we want \(t_n\) to affect our prediction.

The initial \(\tau_0\) can be defined as a constant or as an overall system

average.

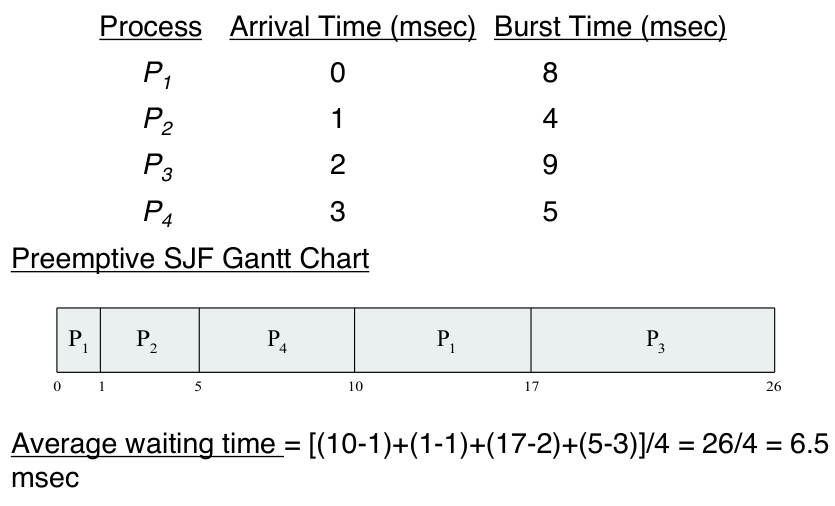

Preemptive SJF

The SJF algorithm can be either preemptive or non-preemptive.

Consider a situation where a process arrives at the ready queue with a shorter

CPU burst when a process is currently running. If the scheduler considers

removing the current process and scheduling the new one then it is preemptive.

If the scheduler will always allow the process running to finish before

scheduling the next process then it is non-preemptive.

Here is an example:

Here we see that process \(P_1\) was scheduled but when \(P_2\) arrived, it has

a shortest CPU burst than the remaining CPU burst of \(P_1\), and therefore

replace \(P_1\).

Here is the algorithm written for a simulation of CPU scheduler:

Github Preemptive SJF

Round Robin (RR)

The round-robin (RR) scheduling algorithm is similar to FCFS scheduling, but

preemption is added to enable the system to switch between processes.

Each process, when scheduled given a small unit of time, called a time

quantum or time slice that it can run for. Once the time quantum is done,

another process is scheduled. The ready queue is treated as a circular queue.

The CPU scheduler goes around the ready queue, allocating the CPU to each

process for a time interval of up to 1 time quantum.

If the CPU burst of the currently running process is longer than 1 time quantum,

the timer will go off and will cause an interrupt to the operating system. A

context switch will be executed, and the process will be put at the tail of

the ready queue. The CPU scheduler will select the next process in the ready

queue. Thus, the RR scheduling algorithm is thus preemptive.

Here is the algorithm written for a simulation of CPU scheduler:

Github Round Robin

The average waiting time is typically high in this policy.

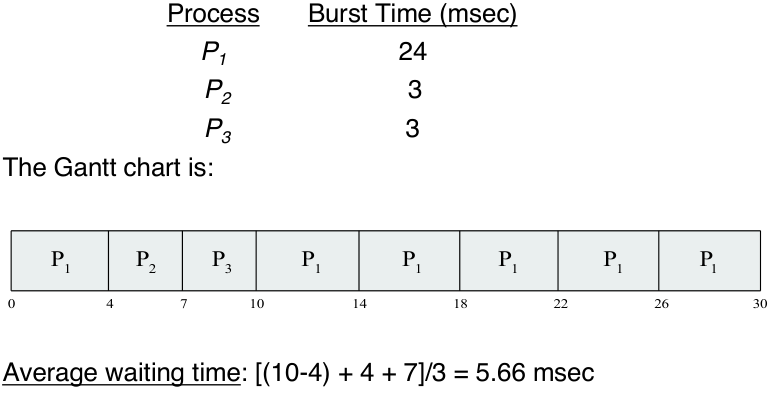

Example:

The performance of the round robin algorithm depends heavily on the size of the

time quantum. If the time quantum is extremely large then the policy behaves the

same as the FCFS policy. In contract, if the time quantum is extremely small,

the policy can result in a large number of context switches.

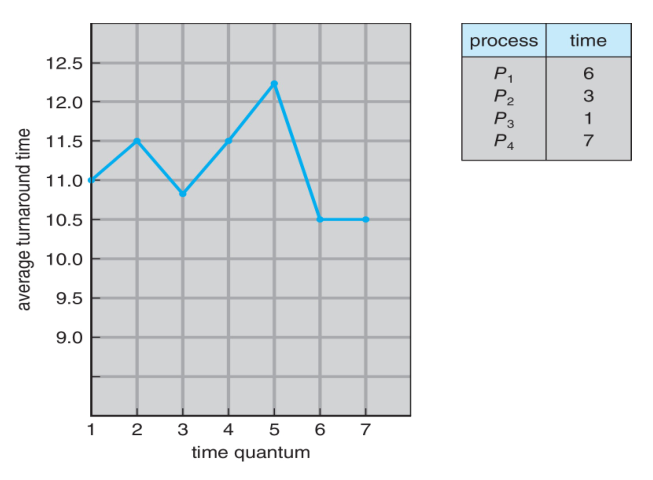

The turnaround time also depends on the size of h time quantum. The

following figure shows the average turnaround time for a set of processes for a

given time quantum:

We can see that turnaround time does not necessarily improve as the time-quantum

size increases.

In general, the average turnaround time can be improved if most processes finish

their next CPU burst in a single time quantum. The time quantum should be large

compared with the context-switch time, but it should not be too large. A rule of

thumb is that 80 percent of the CPU bursts should be shorter than the time

quantum.

Priority Scheduling

A priority is associated with each process, and the CPU is allocated to the

process with the highest priority. Processes with equal priority are scheduled

in FCFS order.

In this course, we assume that a low number represents a high priority. However,

this is only a convention.

-

Priorities can be defined either internally or externally.

-

Internal priorities use some measurable quantity or quantities to compute the

priority of the process. Example, memory requirements, open files, etc.

-

External priorities are set by criteria outside the operating system. On

Linux you can use the nice command to change the priority of a process.

A major problem with priority scheduling algorithms is indefinite blocking

or starvation. This is when a low-priority task is never scheduled because

higher priority tasks keep showing up.

A solution to this problem is aging. This involves gradually increasing the

priority of the processes that wait in the system for a long time.

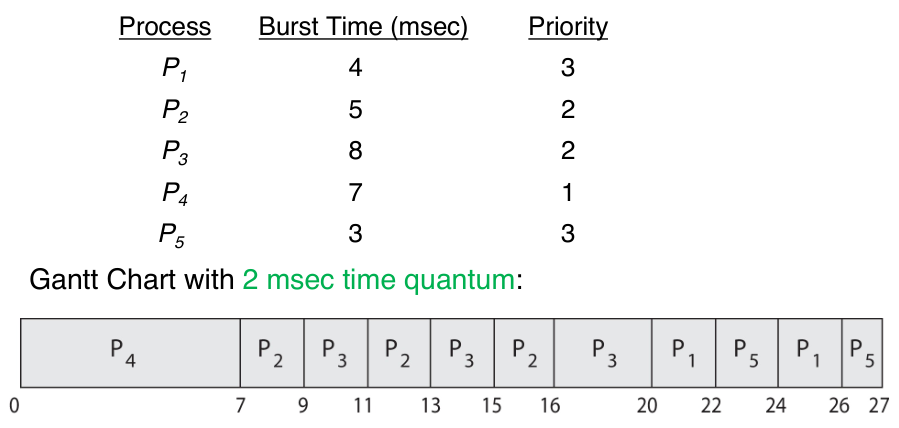

Priority Scheduling with Round-Robin

Priority scheduling can be combined with the round-robin scheduling so that the

system executes the highest-priority process using priority scheduling and runs

processes with the same priority using round-robin scheduling.

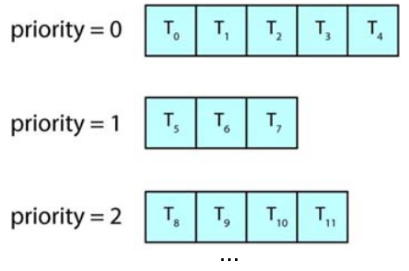

Multilevel Queue Scheduling

With priority scheduling, all processes may be placed in a single queue and the

scheduler selects the process with the highest priority to run. Depending on how

the queues are managed, an \(O(n)\) search may be necessary to determine the

highest-priority process.

In practice, it is often easier to have separate queues for each distinct

priority, which is known as a multilevel queue.

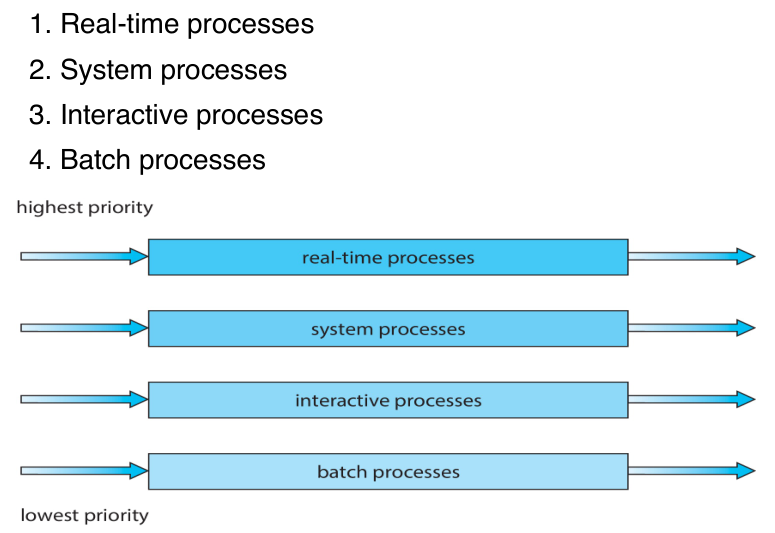

A multilevel queue could be used to achieve a flexible process scheduling. A

multilevel queue scheduling algorithm typically partitions processes into

several separate queues based on the process type. For example, separate queues

can be maintained for background and foreground processes.

Each queue could have its own scheduling algorithm. For example, the foreground

queue could be scheduled by an RR algorithm while the background queue could be

scheduled by FCFS algorithm.

Another possibility is to time-slice among the queues where each queue gets a

certain portion of the CPU time, which it can then schedule among its various

processes. For instance, in the foreground-background queue example the

foreground queue can be given 80 percent of the CPU time and the background

queue gets 20 percent of CPU time.

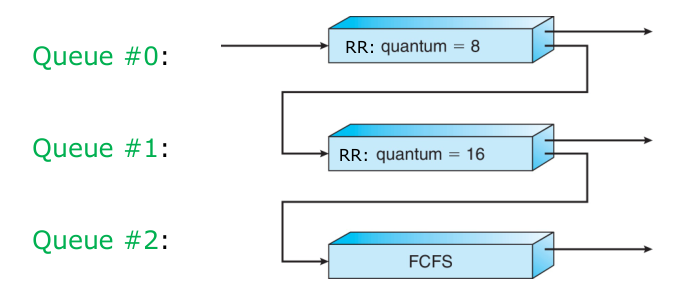

Feedback Based Scheduling

Normally, when the multilevel queue scheduling algorithm is used, processes are

permanently assigned to a queue when they enter the system. This setup has the

advantage of low scheduling overhead but it is inflexible.

The multilevel feedback queue scheduling algorithm, in contrast, allows a

process to move between queues. The idea is to separate processes according to

the characteristics of their CPU bursts.

If a process uses too too much CPU time, it will be moved to a lower-priority

queue. This scheme leaves I/O bound and interactive processes in the

higher-priority queues.

In addition, if a process that waits too long in a lower-priority queue may be

moved to a higher-priority queue. This is a form of aging prevents starvation.

For example, consider a multilevel feedback queue scheduler with three queues,

numbered from 0 to 2. The scheduler first executes all processes in queue 0.

Only when queue 0 is empty will it execute processes in queue 1. A process in

queue 1 will be preempted by a process arriving for queue 0. A process that

arrives for queue 1 will preempt processes in queue 2.

-

An entering process is put in queue 0.

- A process in queue 0 is given a time quantum of 8 milliseconds.

- If it does not finish within this time window, it is moved to the tail of

queue 1.

-

If queue 0 is empty, the process at the head of queue 1 is given a quantum of

16 milliseconds.

- If it does not complete within the time window, it it moved to tail of

queue 2.

- If a process arrives in queue 0 then a process is queue 1 is preempted.

-

If queue 0 and 1 are empty, the processes in queue 2 are run on an FCFS

basis.

- If a process arrives in queue 0 or 1, then a process is queue 2 is preempted.

-

To prevent starvation, a process that waits too long in a lower-priority

queue may gradually be moved to a higher-priority queue.

The definition of a multilevel feedback queue scheduler makes it the most

general CPU-scheduling algorithm. However, this also makes it the most complex

algorithm.

Thread Scheduling

On most modern operating systems, it is kernel-level threads (not processes)

that are being scheduled by the operating system.

For systems using many-to-one models, two steps are required to allocate the CPU

to a user-level thread:

-

Process contention scope (PCS): Locally, the threads belonging to the same

process are scheduled so that a user-level thread could be mapped to a

kernel-level thread.

-

System Contention Scope (SCS): Globally, kernel-level threads are

scheduled so that the CPU could be allocated to a kernel-level thread.

For systems using a one-to-one mode, such as Windows and Linux, only SCS is

required.

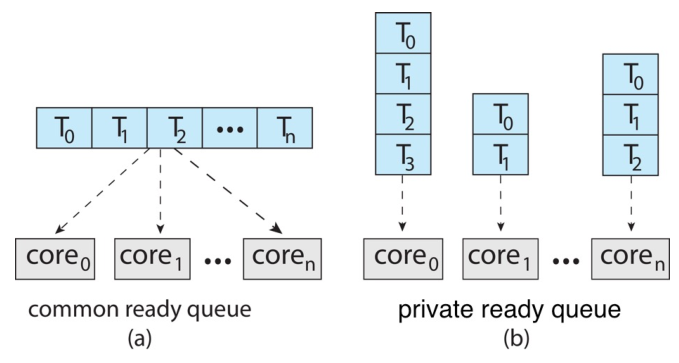

Multiple-Processor Scheduling

The scheduling algorithms we have discussed only talk about how to schedule

processes assuming a single processor. But most computers today have multiple

processing cores, how do we handle such situations?

-

Asymmetric Multiprocessing: A single processor, the master server,

handles all scheduling decisions, I/O processing, and other system activities.

The other processors only execute user code. This method is simple to

implement because only one processor accesses the system data structures,

reducing the need for data sharing. However, this potentially becomes a

bottleneck that could affect overall system performance.

-

Symmetric Multiprocessing (SMP): It is the standard scheme for a

multiprocessor system. Each processor is self-scheduling. Namely the scheduler

for each processor examine the ready queue and select a process to run.

With SMP, there are two possible strategies to organize the processes eligible

to be scheduled. Either we have a single ready queue or each processor is given

its own private ready queue.

Since strategy (b) does not require any synchronization to access the queue, it

is the most common approach on systems supporting SMP.

Load Balancing

Load balancing attempts to keep the workload evenly distributed across all

processors in an SMP system. This is an important consideration to fully utilize

the benefits of having multiple processors.

Load balancing is typically necessary only on systems where each processor has

its own private ready queue of eligible processes to execute. On systems with a

common queue, load balancing is unnecessary.

There are two general approaches to load balancing: push migration and pull

migration.

-

Push Migration: a specific task periodically checks the load on each

processor; if it finds an imbalance, it evenly distributes the load by moving

processes from overloaded to idle (or less-busy) processors.

-

Pull Migration: in this case, an idle processor pulls a waiting process

from a busy processor.

Pull and push migration do not need to be mutually exclusive and are, in fact,

often implemented in parallel on load-balancing systems. For example, the Linux

process scheduler, Completely Fair Scheduler (CFS), implements both

techniques.

Processor Affinity

On most systems, each processor has its own cache memory. When a process has

been running on a specific processor the data most recently accessed by the

process populate the cache of the processor. As a result, successive memory

accesses by the process are often satisfied by cache memory.

If a process migrates to another processor, say, due to load balancing the

contents of the cache memory must be invalidates for the first processor, and

the cache for the second processor must be repopulated.

Because of the high cost of invalidating and repopulating cache, most operating

systems with SMP support try to avoid migrating a process from one processor to

another. This is known as processor affinity. A process has an affinity for

the processor on which it is currently running. There is an attempt to always

assign the same processor to a given processor.

Examples

Process scheduling in Linux:

-

Until Version 2.5: The Linux kernel ran a variation of the traditional

UNIX scheduling algorithm. This algorithm was not designed with SMP systems in

mind, it did not adequately support systems with multiple processors.

-

With Version 2.5: The scheduler was overhauled to include a schedulling

algorithm known as \(O(1)\) that run in constant time regardless of the number

of tasks in the system. This also provided increased support for SMP systems,

including processor affinity and load balancing between processors. This leads

to excellent performance on SMP systems but leads to poor response times for

interactive processes that are common on many desktop computer systems.

-

With Version 2.6 In release 2.6.23 of the kernel, the Completely Fair

Scheduler (CFS) became the default Linux scheduling algorithm.

Algorithm Evaluation

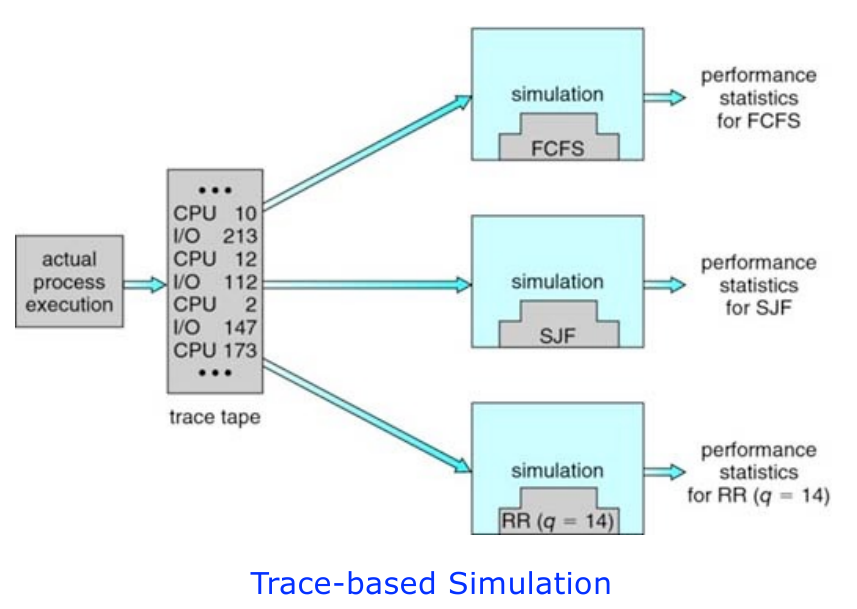

-

Deterministic Modeling: an evaluation method for scheduling algorithms.

This method takes a particular predetermined workload and generates the

performance of an algorithm under that workload. However, it requires exact

numbers for input and its answers only apply to those specific cases. These

methods can be used to indicate trends that can be analyzed.

-

Simulations: Running a simulation involves programming a model of the

computer system. The data to drive the simulation can be generated in several

ways. The most common method uses a random-number generator that is programmed

to generate processes, CPU burst times, arrivals, departures, and so on.

- We can use trace files to monitoring the real systems and recording the

sequence of actual events. We then use this sequence to drive the

simulation.

Processes can be executed concurrently or in parallel. Context switch could be

carried out rapidly in order to provide concurrent execution. This means that

one process may only be partially completed before another process is

scheduled. Two different processes could be executed simultaneously on separate

processing cores.

We need to solve issues involving the integrity of data shared by several

processes.

Race Condition: A race condition occurs when two or more process can access

shared data and they try to change it at the same time. Because the process

scheduling algorithm can swap between processes at any time, you don't know the

order in which the processes will attempt to access the shared data. Therefore,

the result of the change in data is dependent on the thread scheduling

algorithm, i.e. both threads are "racing" to access/change the data.

Source For Race Condition

Here is a good YouTube Video that

explains race conditions and dead-locks.

Critical Section Problem

Consider a system with \(n\) processes \(\{p_0, p_1, \dots, p_{n-1}\}\). Each

process has a segment of code, called a critical section, which accesses and

updates data that is shared with at least one other process.

The important feature of the system is that, when one process is running in its

critical section, no other process is allowed to run in its critical section.

That is, no two processes run in their critical section at the same time.

The critical-section problem is about designing a protocol that the prcoesses

can use to synchronize their activity so as to cooperatively share data. With

this protocol, each process must request permission to enter its critical

section.

- Entry section: The section of code implementing the request.

- Exit section: The critical section may be follow by an exit section.

- Remainder section: the remaining code is the remainder section.

A solution must satisfy the following three requirements:

-

Mutual Exclusion: If process \(P_i\) is running in its critical section,

then no other processes can run in their critical sections.

-

Progress: If no process is running in its critical section and some

processes wish to enter their critical sections, then only those processes

that are not running in their remainder sections can participate in the

procedure of selecting a process to enter its critical section next, and

this selection cannot be postponed indefinitely.

-

Bounded Waiting: There exists a bound, or limit, on the number of times

that other processes are allowed to enter their critical sections after a

process has bade a request to enter its critical section and before that

request is granted.

Peterson's Solution

A software-based solution to the critical-section problem.

Important Note: However, because of the way modern computer architectures

perform basic machine-language instructions, there is no guarantee that

Peterson's solution will work correctly with such architectures. This is

primarily because to improve system performance, processors and/or compilers may